The project we worked on for the ATMOS Tech Expo was a Ball Balancing Bot. The objective of the project was to balance a ball on a plate.

The principal methods employed were digital image processing and PID control.

Digital image processing involved using OpenCV to track the position of the ball on the plate in images captured by an overhead camera. We used OpenCV 2.4.9 with C++.

The OpenCV functions used to detect the ball are:

1. Dilate

2. Erode

3. Gaussian blur

4. HoughCircles
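To illustrate what the first two functions do, here is a plain C++ sketch (no OpenCV) of binary erosion and dilation with a 3×3 square kernel on a small mask. It is not the project's code: in the real pipeline `cv::erode` and `cv::dilate` operate on the camera image, and the kernel size used there is not stated, so 3×3 is an assumption. On grayscale images the same operations act as local minimum and maximum filters. Erosion followed by dilation (a morphological opening) removes specks smaller than the kernel while roughly restoring larger blobs such as the ball.

```cpp
#include <vector>

// Binary mask: 1 = foreground pixel, 0 = background.
using Mask = std::vector<std::vector<int>>;

// Apply a 3x3 square kernel. For erosion a pixel stays 1 only if all in-bounds
// neighbours are 1; for dilation it becomes 1 if any in-bounds neighbour is 1.
// Out-of-bounds neighbours are simply ignored (an assumption of this sketch).
Mask morph3x3(const Mask& in, bool erode) {
    const int rows = static_cast<int>(in.size());
    const int cols = rows > 0 ? static_cast<int>(in[0].size()) : 0;
    Mask out(rows, std::vector<int>(cols, 0));
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            bool all = true, any = false;
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    const int rr = r + dr, cc = c + dc;
                    if (rr < 0 || rr >= rows || cc < 0 || cc >= cols) continue;
                    if (in[rr][cc]) any = true; else all = false;
                }
            }
            out[r][c] = (erode ? all : any) ? 1 : 0;
        }
    }
    return out;
}

Mask erode3x3(const Mask& m)  { return morph3x3(m, true); }
Mask dilate3x3(const Mask& m) { return morph3x3(m, false); }

// Opening (erode, then dilate): specks smaller than the kernel vanish, while
// larger blobs are eroded and then grown back to roughly their original size.
Mask open3x3(const Mask& m) { return dilate3x3(erode3x3(m)); }
```

For example, a 7×7 mask containing a 3×3 blob plus one isolated noise pixel comes out of `open3x3` with the blob intact and the noise pixel removed, which is the kind of cleanup that makes the later circle detection more reliable.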

After a circle is detected, we compute the coordinates of its center with respect to a fixed Cartesian frame and send them to the Arduino UNO over a serial connection.
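The coordinate step can be sketched as below. OpenCV image coordinates have their origin at the top-left corner with y pointing down, and HoughCircles reports each center as floating-point values, so the sketch shifts the origin to the pixel where the plate center appears, flips y to point up, and rounds to integers. The calibration values (`originX`, `originY`) and the `"x,y\n"` message format are hypothetical: the source only says the coordinates are sent over a serial connection, not how they are encoded.

```cpp
#include <cmath>
#include <string>

// Ball position in a plate-centered Cartesian frame (pixels, y pointing up).
struct PlatePoint {
    int x;
    int y;
};

// (px, py) is the circle center in image coordinates (origin top-left, y down).
// (originX, originY) is the pixel where the plate center appears; assumed to
// come from a calibration step.
PlatePoint toPlateFrame(double px, double py, double originX, double originY) {
    return PlatePoint{
        static_cast<int>(std::lround(px - originX)),
        static_cast<int>(std::lround(originY - py))  // flip y so it points up
    };
}

// Hypothetical wire format: ASCII integers "x,y" terminated by a newline.
std::string encodeMessage(const PlatePoint& p) {
    return std::to_string(p.x) + "," + std::to_string(p.y) + "\n";
}
```

For instance, a center at pixel (400, 200) with the plate center at (320, 240) becomes the message `80,40`. A newline-terminated text line is easy to read on the Arduino side, for example with `Serial.parseInt()` or `Serial.readStringUntil('\n')`; a binary encoding would be more compact but harder to inspect in a serial monitor.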

Once the position of the ball relative to the plate is known, a PID controller sets the angles of the servo motors. The servos are connected to push rods that tilt the plate, keeping the ball balanced.
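As a sketch of the last step, assume each of the two servos tilts the plate about one axis and that the PID correction is expressed in degrees relative to the level position. The correction can then be turned into a servo command as below. The neutral angle (90°) and the 60° to 120° safety range are hypothetical values, not taken from the project.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical mechanical constants: the servo angle at which the plate is
// level, and the range that keeps the push rod within its safe travel.
constexpr int kNeutralDeg = 90;
constexpr int kMinDeg = 60;
constexpr int kMaxDeg = 120;

// Turn a PID correction (degrees away from level) into an absolute servo
// command, clamped so a large correction cannot drive the push rod past its
// mechanical limit.
int servoAngle(double correctionDeg) {
    const int target = kNeutralDeg + static_cast<int>(std::lround(correctionDeg));
    return std::max(kMinDeg, std::min(kMaxDeg, target));
}
```

On the Arduino, the returned angle would be passed to `Servo::write()` from the standard Servo library. Since the UNO toolchain does not ship the C++ standard library, Arduino's `constrain()` and `round()` can stand in for `std::min`/`std::max` and `std::lround` there.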

PID stands for Proportional-Integral-Derivative. It is a feedback control loop mechanism widely used in industrial control systems.
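For reference, the textbook parallel form of the PID law is given below. Here $e(t) = r(t) - y(t)$ is the error between the setpoint $r(t)$ (the desired ball position, for example the plate center) and the measured position $y(t)$, and $K_p$, $K_i$, $K_d$ are gains tuned on the rig. The source does not state the gains or the exact form used in the project, so treat this as the standard version. The second line is a common discrete-time approximation for a controller updated every $\Delta t$ seconds, such as once per camera frame.

$$
u(t) = K_p\,e(t) + K_i \int_0^{t} e(\tau)\,d\tau + K_d\,\frac{de(t)}{dt}
$$

$$
u_k = K_p\,e_k + K_i \sum_{j=0}^{k} e_j\,\Delta t + K_d\,\frac{e_k - e_{k-1}}{\Delta t}
$$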

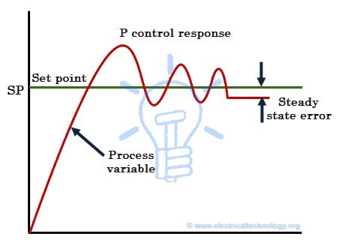

Term P is proportional to the current value of the error e(t): the larger the error, the larger the correction it produces.

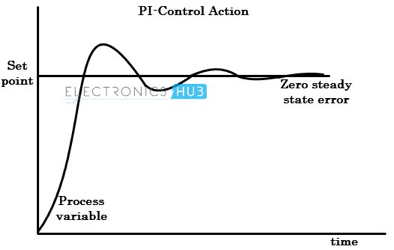

Term I accounts for past values of the error by integrating them over time. As long as any error persists, the integral keeps growing and pushes the output further, which drives the steady-state error to zero.

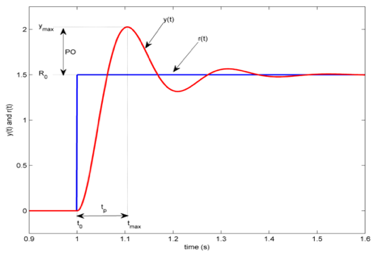

Term D is a best estimate of the future trend of the error, based on its current rate of change. It produces a damping effect that reduces overshoot and oscillation.
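The three terms translate directly into a discrete controller that runs once per new ball position. The sketch below is a minimal single-axis version in standard C++, not the code from the linked repository; the gains, output limits and sampling interval are placeholders that would need tuning on the actual rig. Two instances, one per plate axis, would be needed for the two servos.

```cpp
#include <algorithm>

// Minimal discrete PID controller for one plate axis.
class Pid {
public:
    Pid(double kp, double ki, double kd, double outMin, double outMax)
        : kp_(kp), ki_(ki), kd_(kd), outMin_(outMin), outMax_(outMax) {}

    // setpoint: desired ball position (e.g. 0 = plate center)
    // measured: ball position reported by the camera
    // dt: time since the previous update, in seconds (must be > 0)
    double update(double setpoint, double measured, double dt) {
        const double error = setpoint - measured;

        // I term: accumulate the error over time.
        integral_ += error * dt;

        // D term: rate of change of the error. On the first call there is no
        // previous error to difference against, so the D term is zero.
        const double derivative = hasPrev_ ? (error - prevError_) / dt : 0.0;
        prevError_ = error;
        hasPrev_ = true;

        // P + I + D, clamped to the allowed output range.
        const double out = kp_ * error + ki_ * integral_ + kd_ * derivative;
        return std::max(outMin_, std::min(outMax_, out));
    }

private:
    double kp_, ki_, kd_, outMin_, outMax_;
    double integral_ = 0.0;
    double prevError_ = 0.0;
    bool hasPrev_ = false;
};
```

For example, `Pid xAxis(2.0, 0.5, 0.1, -30.0, 30.0);` followed by `xAxis.update(0.0, ballX, dt)` every frame (with a hypothetical `ballX` from the vision step) yields a correction within ±30. Clamping only the output, as here, lets the integral keep growing while the output is saturated (integral windup); limiting `integral_` as well is a common refinement. On the UNO itself, Arduino's `constrain()` can replace `std::min`/`std::max`.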

The components we used are:

1. Wood (for the base plate)

2. Web camera, attached to the arm of a stand directly over the plate

3. Two servo motors with attached push rods

4. Universal joint (with extension rod) on which the plate rests

5. Acrylic sheet (the plate)

6. Arduino UNO

The complete code is available at the following link:

https://github.com/abhishekkhurana12345/Ball-Balancing

This project was done by the PHoEnix Tech Team.

Keshav Sharan 20171529

Jay Karhade 20180852

Abhishek Khurana 20180621

Arpit Anwani 20180277

Mohit 20180518

Rohan 20180590

Ishika Bhattacharya 20180421

Om Kulkarni 20180518